Two friends meet after a long time and greet each other like [source motion]. What if one of the friends becomes overly touched upon meeting the other friend?

Inter-MT2: Interactive Multi-Turn Motion-Text Dataset

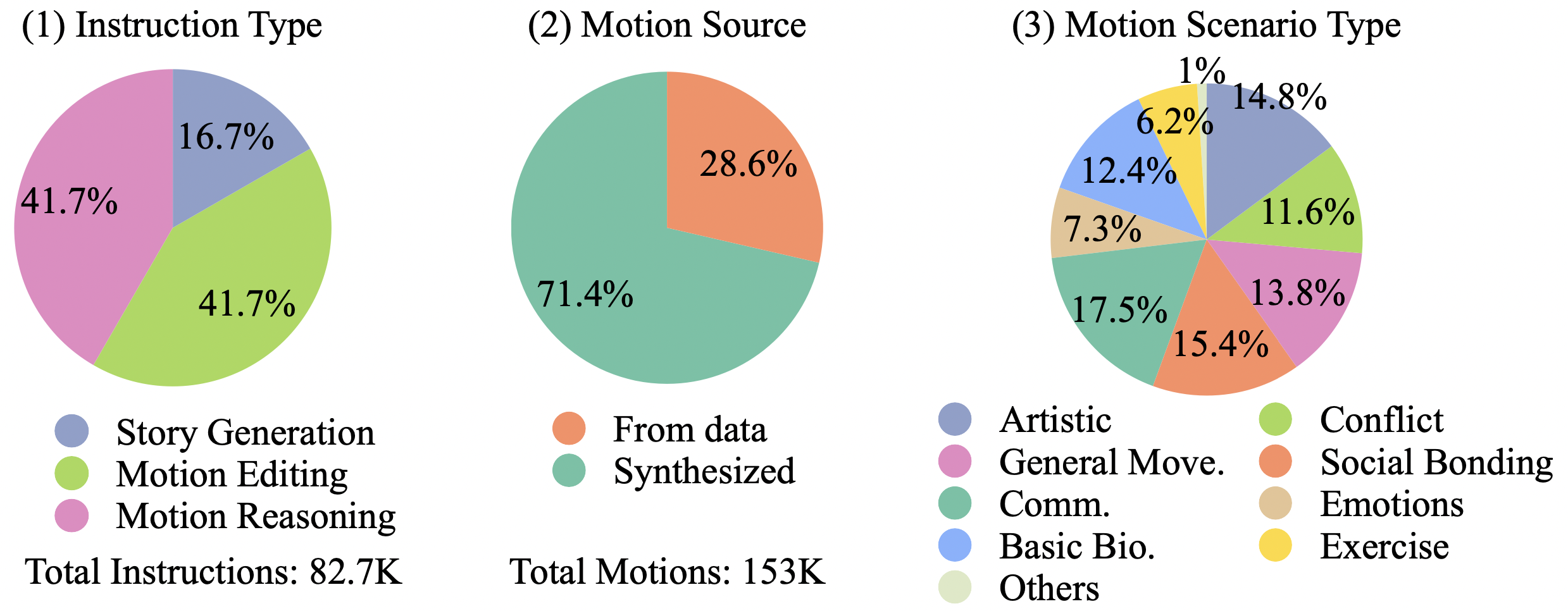

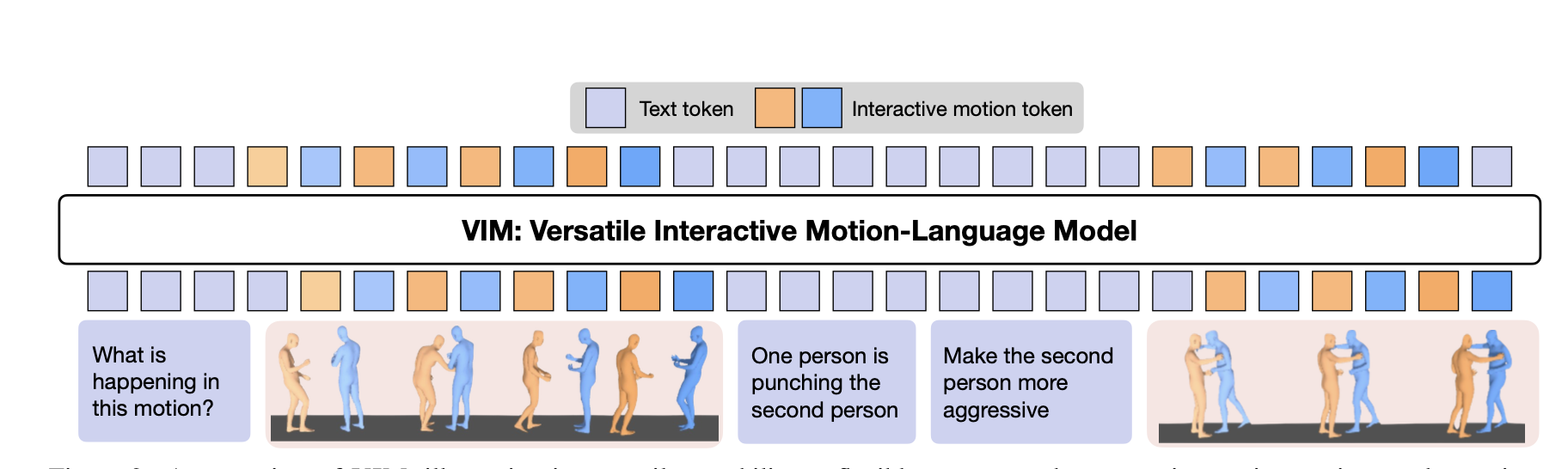

Existing datasets for modeling interactive motion, such as Inter-X and InterHuman, lack diverse instructions and contain no multi-turn conversations. To address this gap, we introduce Inter-MT2, the INTERactive Multi-Turn Motion-Text dataset. It covers a wide range of interactive motion scenarios and provides multi-turn conversations, diverse instructions, and spatiotemporally aligned motions between two individuals.

- Generate Motion Captions & Instructions. We use GPT-4o to generate motion captions and conversational instructions for a variety of tasks, such as motion editing, reasoning, and story generation. This broadens the range of tasks a model trained on the data can handle.

- Generate Corresponding Motion. We use InterGEN, a state-of-the-art text-to-motion diffusion model for two-person interactions, to synthesize motions that match the LLM-generated captions.
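To make the structure of a multi-turn sample concrete, here is a minimal sketch of how one conversation could be represented. It rests on several assumptions. The class and field names (`InteractionMotion`, `Turn`, `Conversation`) are hypothetical, and so is the per-frame feature layout; none of them come from the released dataset format. The one property the sketch enforces is temporal alignment: both people's tracks must span the same frames.

```python
from dataclasses import dataclass, field
from typing import List, Literal

# Hypothetical representation: each frame is a flat list of one person's
# features (e.g. joint positions). The real Inter-MT2 format may differ.
Frame = List[float]


@dataclass
class InteractionMotion:
    """A two-person motion whose tracks share one time axis."""
    person_a: List[Frame]
    person_b: List[Frame]
    source: Literal["dataset", "synthesized"]

    def __post_init__(self) -> None:
        # Temporal alignment: frame t of person_a and frame t of person_b
        # describe the same instant, so both tracks must have equal length.
        if len(self.person_a) != len(self.person_b):
            raise ValueError("both people must have the same number of frames")

    @property
    def num_frames(self) -> int:
        return len(self.person_a)


@dataclass
class Turn:
    instruction: str           # user request for this turn (LLM-generated)
    caption: str               # text describing the motion that answers it
    motion: InteractionMotion  # the motion itself


@dataclass
class Conversation:
    task: str                  # e.g. "editing", "reasoning", "story"
    turns: List[Turn] = field(default_factory=list)

    def add_turn(self, turn: Turn) -> None:
        self.turns.append(turn)


# Usage: a one-turn editing conversation with 60 placeholder frames.
# The instruction reuses the example prompt from this page; the caption
# is illustrative only.
greeting = InteractionMotion(
    person_a=[[0.0] for _ in range(60)],
    person_b=[[0.0] for _ in range(60)],
    source="dataset",
)
conv = Conversation(task="editing")
conv.add_turn(Turn(
    instruction=("Two friends meet after a long time and greet each other like "
                 "[source motion]. What if one of the friends becomes overly "
                 "touched upon meeting the other friend?"),
    caption="Two friends embrace warmly after a long separation.",
    motion=greeting,
))
```

Putting the length check in `__post_init__` means a misaligned pair is rejected when it is constructed, so it never slips into a conversation unnoticed.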

Inter-MT2 Pipeline

Our pipeline creates samples in two ways. First, starting from a dataset motion, we generate a caption and an instruction and then use InterGEN to synthesize a matching motion, so each sample contains both the original and the synthesized motion together with the instruction. Second, we generate two captions and instructions and synthesize a motion for each, producing samples made entirely of synthesized motions. Combining dataset-sourced and generated motions in this way supports reliable interactive motion modeling.
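The two sample-construction modes can be sketched as follows. In the described pipeline, GPT-4o plays the LLM role and InterGEN plays the text-to-motion role. Here both are replaced by hypothetical callables (`llm`, `t2m`) whose signatures are assumptions, not the real APIs of either system. The sketch also assumes two further details: in mode 1 the LLM is conditioned on the dataset motion's existing caption, and in mode 2 the second caption is conditioned on the first.

```python
from typing import Callable, Dict, List, Tuple

Motion = List[List[float]]  # placeholder two-person motion representation (assumed)

# Hypothetical interfaces standing in for GPT-4o and InterGEN.
CaptionAndInstruction = Callable[[str], Tuple[str, str]]  # context -> (caption, instruction)
TextToMotion = Callable[[str], Motion]                    # caption -> motion


def sample_from_dataset(source_motion: Motion, source_caption: str,
                        llm: CaptionAndInstruction, t2m: TextToMotion) -> Dict:
    """Mode 1: pair a real dataset motion with a newly synthesized one."""
    caption, instruction = llm(source_caption)  # caption for the target motion
    target_motion = t2m(caption)
    return {
        "instructions": [instruction],
        "motions": [source_motion, target_motion],
        "sources": ["dataset", "synthesized"],
    }


def sample_fully_synthetic(seed: str, llm: CaptionAndInstruction,
                           t2m: TextToMotion) -> Dict:
    """Mode 2: generate two captions/instructions and synthesize both motions."""
    first_caption, first_instruction = llm(seed)
    # Assumption: conditioning the second caption on the first keeps the two
    # motions related (e.g. an original motion and its edited version).
    second_caption, second_instruction = llm(first_caption)
    return {
        "instructions": [first_instruction, second_instruction],
        "motions": [t2m(first_caption), t2m(second_caption)],
        "sources": ["synthesized", "synthesized"],
    }


# Toy stubs so the sketch runs without any model.
def toy_llm(context: str) -> Tuple[str, str]:
    return f"variant of: {context}", f"Now change it: {context}"


def toy_t2m(caption: str) -> Motion:
    return [[float(len(caption))]]  # dummy one-frame "motion"


s1 = sample_from_dataset([[0.0]], "two people shake hands", toy_llm, toy_t2m)
s2 = sample_fully_synthetic("two people dance together", toy_llm, toy_t2m)
```

Passing the generators in as callables keeps the pipeline logic separate from any particular LLM or diffusion model, so real models can be substituted for the stubs without changing either function.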

Overall, we collected 82K multi-turn conversations, comprising 96K synthesized and 56K real motions. Figure 2 shows statistics and samples from Inter-MT2; the motion scenarios were categorized by a large language model based on the motion captions. We aim to showcase spatiotemporally aligned motions between two individuals and summarize everything on the project page.
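The reported counts can be related with simple arithmetic. Because the figures are rounded to the nearest thousand, the ratios below are approximate. The per-conversation figure is an average that assumes each motion belongs to exactly one conversation.

$$
96\text{K} + 56\text{K} = 152\text{K motions}, \qquad
\frac{96\text{K}}{152\text{K}} \approx 63\%\ \text{synthesized}, \qquad
\frac{152\text{K}}{82\text{K}} \approx 1.85\ \text{motions per conversation}
$$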